2023-08-12, Johannes Köppern

Let’s talk about a prompting technique that improves the correctness of answers from Large Language Models: Chain of Thought prompting with self-consistency, introduced in Self-Consistency Improves Chain of Thought Reasoning in Language Models, Wang et al., 2022-04. Compared to plain Chain of Thought prompting, it yields correct answers more often, as the source shows experimentally.

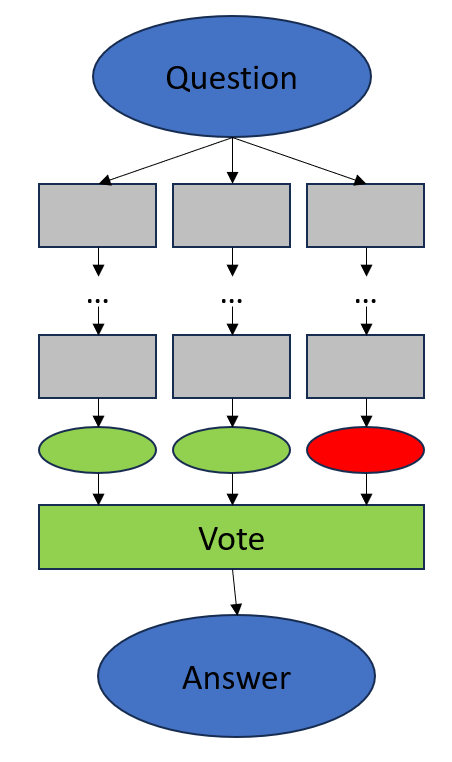

The main idea: it is assumed that the language model contains the correct answer and returns it in the majority of cases when it is asked the same question repeatedly. The process of asking repeatedly and then choosing a final answer is illustrated in the figure above. In this example, a majority vote is used for decision-making, i.e., the answer that occurs most frequently is selected as the correct one. In any case, the Chain of Thought with Self-Consistency process ends with a selection step that derives one final answer from all the answers collected.
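
To get a feeling for why repeated asking can help, here is a small back-of-the-envelope sketch. It assumes, purely for illustration, that every answer is either right or wrong and that each one is right independently with the same probability `p`. Real samples from one model are correlated, so these numbers are optimistic. The function name `majority_correct_probability` is made up for this example.

```python
from math import comb


def majority_correct_probability(p: float, n: int) -> float:
    """Probability that more than half of n answers are correct.

    Assumption (for illustration only): each answer is correct with the
    same probability p, independently of all other answers.
    """
    needed = n // 2 + 1  # smallest count that forms a strict majority
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(needed, n + 1)
    )


# Compare one answer with votes over 5 and 15 answers.
for n in (1, 5, 15):
    print(n, round(majority_correct_probability(0.6, n), 3))
```

Under these assumptions, a model that is right 60% of the time reaches about 68% with a vote over five answers. The same calculation also shows the limit of the idea: if `p` is below 0.5, voting makes the result worse.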

This article discusses the method briefly and then shows one option of how to implement it in Python.

Procedure

1. Add "think step-by-step" to your original question (in the following, this augmented question is simply called the question).

2. Ask the question repeatedly (n times) and collect the answers.

3. Decide on a voting technique and use it to pick the final answer from the collected answers.
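
The three steps can be sketched in a few lines of Python. This is a minimal sketch, not the paper's implementation: the `sample` parameter is a hypothetical stand-in for any function that sends a prompt to a language model and returns its answer as text, and the vote is a plain count of identical strings.

```python
from collections import Counter
from typing import Callable, List


def self_consistency(question: str, sample: Callable[[str], str], n: int = 5) -> str:
    """Run the three steps: augment the question, sample n answers, vote."""
    # Step 1: append the chain-of-thought trigger to the original question.
    prompt = question + ", think step-by-step"
    # Step 2: ask the same question n times and collect the answers.
    answers: List[str] = [sample(prompt) for _ in range(n)]
    # Step 3: majority vote; on a tie, the answer seen first wins.
    return Counter(answers).most_common(1)[0][0]


# Hypothetical stand-in for a language model: it hands out canned answers.
canned = iter(["42", "41", "42", "42", "40"])
final = self_consistency("What is 6 times 7?", lambda prompt: next(canned))
print(final)  # 42
```

Passing the model call in as a function keeps the voting logic testable without network access.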

As a voting technique (see step 3 in the list above), the most common answer could be chosen as the final answer. Alternatively, the language model itself can select the most common theme among the answers when instructed like this: *What is the most common theme among these statements? {list of the collected answers}*
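
Counting the most common answer only works if equal answers also look equal, which is rarely the case for full reasoning texts. One possible workaround is to extract a short final answer from each text before counting. The sketch below assumes that every answer ends with a sentence of the form "The answer is X"; this convention, the regular expression, and the helper names are assumptions made for this example.

```python
import re
from collections import Counter
from typing import List, Optional

# Assumed convention: every reasoning path ends with "The answer is <value>."
ANSWER_PATTERN = re.compile(r"the answer is\s+(.+?)\.?\s*$", re.IGNORECASE)


def extract_final_answer(text: str) -> Optional[str]:
    """Return the normalized final answer, or None if the pattern is missing."""
    match = ANSWER_PATTERN.search(text.strip())
    return match.group(1).strip().lower() if match else None


def vote(paths: List[str]) -> Optional[str]:
    """Majority vote over the extracted answers; unparsable paths are ignored."""
    answers = [a for a in map(extract_final_answer, paths) if a is not None]
    return Counter(answers).most_common(1)[0][0] if answers else None


paths = [
    "6 * 7 = 42. The answer is 42.",
    "7 * 6 is 42, so the answer is 42",
    "6 + 7 = 13. The answer is 13.",
    "I am not sure.",
]
print(vote(paths))  # 42
```

For open questions without a short final answer, this kind of extraction does not apply, which is where the theme-based instruction above comes in.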

The figure above shows this procedure as well.

Advantages / Performance gain

Chain of Thought with Self-Consistency helps to overcome two major problems of Large Language Models (LLMs), as the mentioned source describes. The two problems, which the method mitigates but does not fully remove, are:

1. **Repetitiveness and Local-Optimality in Greedy Decoding**: With greedy decoding, the LLM picks the most probable next token at each step of text generation. It’s called greedy because it always takes the locally best option. This can lead to local optimality and to missing the big picture, since the model only considers which next token is best in the current context and not where it wants to get in the long run.

2. **Stochasticity of a Single Sampled Generation**: Sampling mitigates the prior issue a bit but introduces a new problem. The next token is no longer always the most probable one; it is drawn with some randomness. While this can give more diverse outputs, it can also generate nonsensical ones.
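
The difference between the two decoding styles can be shown on a toy example. The sketch below is not how a real LLM is implemented; the token scores are made up, and the function names `greedy_pick` and `sampled_pick` are chosen for this illustration only.

```python
import math
import random
from typing import Dict


def softmax(logits: Dict[str, float], temperature: float = 1.0) -> Dict[str, float]:
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    top = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(s - top) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy_pick(logits: Dict[str, float]) -> str:
    """Greedy decoding: always take the single most probable token."""
    return max(logits, key=logits.get)


def sampled_pick(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Sampling: draw a token in proportion to its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]


# Made-up scores for the token that follows "The answer is".
logits = {"42": 2.0, "41": 1.5, "banana": -1.0}
rng = random.Random(0)
print(greedy_pick(logits))  # always "42"
print([sampled_pick(logits, 1.0, rng) for _ in range(5)])  # depends on the seed
```

Greedy decoding returns the same token every time, while sampling occasionally returns a less likely one, including the nonsensical "banana". Self-consistency uses this variety on purpose and lets the vote filter out the outliers.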

Mitigating these problems with our technique results in these advantages:

| Advantage | Description | Quantification |
|---|---|---|
| Improved Performance | Self-consistency has been shown to significantly boost the performance of chain-of-thought prompting on various benchmarks, including GSM8K, SVAMP, AQuA, StrategyQA, and ARC-challenge. | Empirical evaluations have demonstrated performance improvements ranging from 3.9% to 17.9%. |
| Enhanced Reasoning | Self-consistency ensures that the model considers multiple perspectives and approaches to arrive at the correct answer. By leveraging the diverse set of reasoning paths generated through chain-of-thought prompting, this approach enhances the model’s reasoning capabilities and allows for a more comprehensive understanding of complex problems. | N/A |

Thanks to perplexity.ai/ for helping with this table.

How LLMs generate their answers

To delve deeper into the workings of Large Language Models (LLMs) — especially the encoding and decoding processes that are fundamental to them — I recommend the insightful blog post by Dr. Sebastian Raschka, Understanding Encoder And Decoder LLMs. For those looking for a quick overview:

Transformers, introduced in the paper Attention Is All You Need, primarily consist of an encoder and a decoder:

Encoder:

- Tokenizes and translates the input text into embeddings.
- Understands and extracts relevant information from the input.
- Outputs an embedding passed to the decoder.
- Commonly used for tasks like classification.

Decoder:

- Generates output text based on its input.

Interestingly, models like GPT-3 have a decoder-only architecture. To get an overview of the architecture of some of today’s prominent LLMs, refer to a diagram in Dr. Raschka’s post.

**Why is this important for the Chain of Thought with Self-Consistency?**

In LLMs, the selection of the next token heavily relies on the previous tokens. This choice is predominantly based on which token is deemed most probable. While there’s room for introducing some randomness, this approach can be viewed as greedy because it focuses primarily on the immediate next token, often neglecting a more long-term narrative or goal. The Chain of Thought with Self-Consistency method makes use of that randomness: asking several times yields more varied reasoning paths, and the vote over them raises the chance that the final answer is a coherent and correct one.

Implementation

The following Python script implements Chain of Thought prompting with self-consistency and a majority vote for the question *How does Darwin’s evolution work?*:

    # Chain of Thought with Self-Consistency with majority vote demo
    # Code written by OpenAI Code Interpreter
    # 2023-08-07

    import os

    import openai  # uses the pre-1.0 interface (openai.ChatCompletion)
    from dotenv import find_dotenv, load_dotenv

    # Initialize OpenAI API with your key (read from a .env file)
    load_dotenv(find_dotenv(), override=True)
    openai.api_key = os.environ.get("OPENAI_API_KEY")

    engine = "gpt-3.5-turbo"


    def chain_of_thought_prompting(initial_prompt, iterations=5):
        # Step 1: append the chain-of-thought trigger to the question
        current_prompt = initial_prompt + ", think step-by-step"
        messages = [
            {"role": "system", "content": "you answer like a scientist in a brief and precise manner"},
            {"role": "user", "content": current_prompt},
        ]

        # Step 2: request `iterations` completions for the same prompt in one call
        response = openai.ChatCompletion.create(
            model=engine,
            messages=messages,
            max_tokens=150,
            n=iterations,
        )
        responses = [
            choice["message"]["content"]
            for choice in response["choices"]
            if choice["message"]["role"] == "assistant"
        ]

        # Join the collected answers into one string for the voting step
        responses_string = "first answer: " + ", next answer: ".join(responses)
        # Returned as-is here; the voting step itself is not part of this excerpt
        return responses_string
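
The listing collects the answers; the vote still has to follow. One way this could look is sketched below, using the theme instruction from the Procedure section. This is an assumption about a possible continuation, not the original script: `build_vote_prompt` and `vote_with_model` are hypothetical helpers, and `ask` stands for any function that sends one prompt to a model and returns the reply as text.

```python
from typing import Callable, List


def build_vote_prompt(answers: List[str]) -> str:
    """Put the collected answers into the 'most common theme' instruction."""
    listing = "\n".join(f"{i}. {answer}" for i, answer in enumerate(answers, start=1))
    return "What is the most common theme among these statements?\n" + listing


def vote_with_model(answers: List[str], ask: Callable[[str], str]) -> str:
    """Let a model pick the final answer; `ask` is a text-in, text-out callable."""
    return ask(build_vote_prompt(answers))


# Stand-in for a real model call so that the sketch runs offline.
def fake_ask(prompt: str) -> str:
    return "Received %d statements." % prompt.count("\n")


print(build_vote_prompt(["Species change over time.", "Natural selection drives change."]))
print(vote_with_model(["a", "b", "c"], fake_ask))  # Received 3 statements.
```

With the pre-1.0 `openai` package used in the listing, `ask` could be a thin wrapper around `openai.ChatCompletion.create` that sends a single user message and returns the content of the first choice.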

Conclusion

The Chain of Thought with Self-Consistency (CoT-SC) method presents a promising avenue for refining the outputs of Large Language Models (LLMs). By asking the same question several times and voting over the collected answers, this technique makes better use of what the model already knows and produces correct answers more often than a single Chain of Thought response. As AI and machine learning technologies continue to advance, such methods will help to get more precise and reliable results from our models. Readers are encouraged to delve deeper into the source materials linked in this post and explore the potential of CoT-SC in their own AI projects.